At its annual I/O developer conference, Google announced over 100 product updates, including AI-powered features coming to Android, Bard, Maps, Photos, and Workspace; details about its latest large language models; new ways to preview experimental features in products, including an experience that brings generative AI into Search; and much, much more!

A recurring theme across many of these announcements is Google’s commitment to investing in AI responsibility, which includes approaches that will help people identify AI-generated content whenever they encounter it. This also includes building watermarking and other techniques into Google’s models so that every one of its AI-generated images carries markup in the original file, giving people context if they come across the image outside of Google’s platforms.

Here are some of the announcements:

- Bard, Google’s collaborative AI, is now available in over 180 countries, including the Philippines, with no more waitlist! Bard will also soon go multimodal, combining images and text in both prompts and responses, with images in its responses pulled directly from Google Image Search. Find out more about what’s new with Bard here and start creating with Bard at http://bard.google.com/.

- The introduction of PaLM 2, a next-generation language model with improved multilingual, reasoning, and coding capabilities. Developed responsibly, it is faster and more efficient than previous models, which makes it easier to deploy, and it already powers nearly 20 Google products and features, including the recent Bard coding update. Read more about PaLM 2 here.

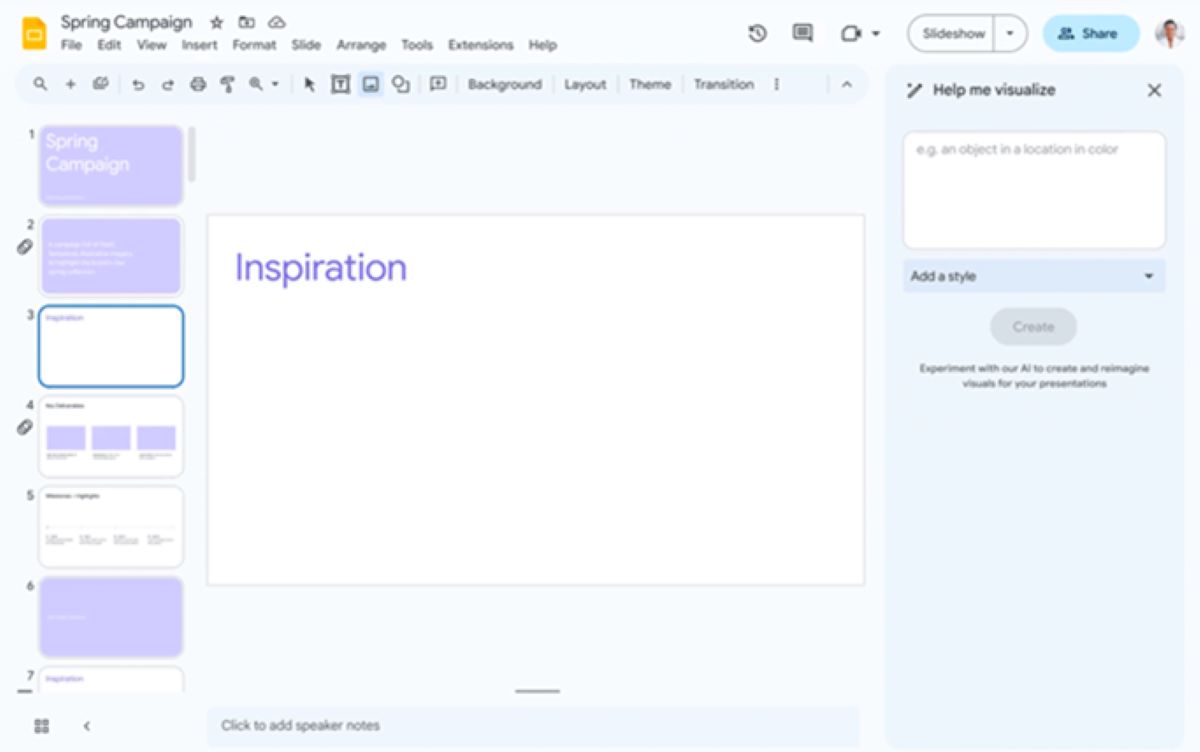

- New generative AI features in Google Workspace that let people collaborate with AI in real time. These include tools to help write in Gmail and Docs, automatic table generation in Sheets, and image generation in Slides and Meet, among others. Take a look at what’s coming to Workspace here.

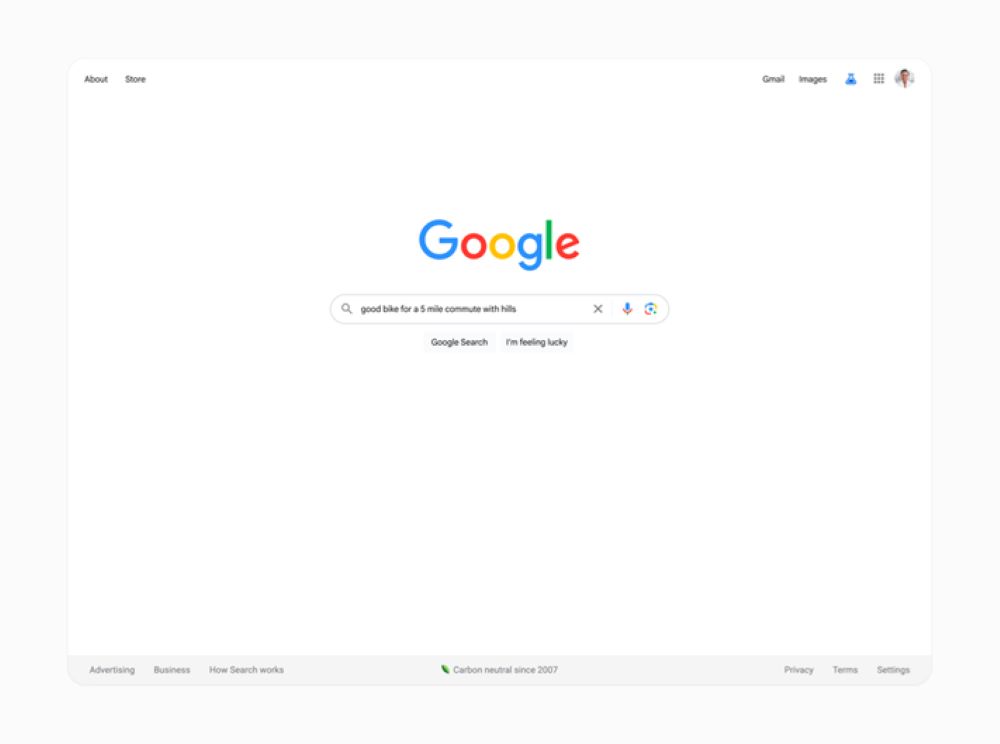

- Early sign-ups for Search Labs, a new way for people to test early-stage experiments and share feedback directly with the teams working on them. Experiments include SGE (Search Generative Experience), which lets people get the gist of a topic they’ve searched for through AI-powered overviews, pointers to explore more, and ways to naturally follow up; Code Tips, which uses large language models to provide pointers for writing code faster and smarter; and Add to Sheets, which lets people insert a search result directly into a spreadsheet and share it. Get more details about Search Labs here.

- Immersive View for routes, coming to Google Maps in cities around the world. The feature uses computer vision and AI to fuse billions of Street View and aerial images into a multidimensional experience that helps you plan your journey more effectively. Check out how Immersive View for routes works here.

- A sneak peek at Magic Editor in Google Photos, an experimental editing experience that uses generative AI to help anyone easily make complex edits to their own photos. Coming first to Pixel later this year, Magic Editor lets users reposition and resize people and objects and replace distractions to improve a photo’s overall composition. Learn how it can help you reimagine your photos here.

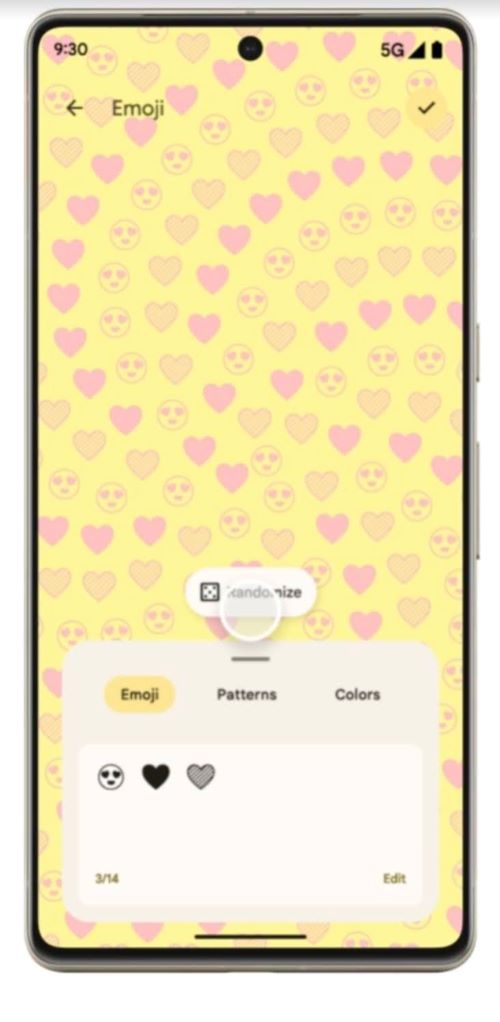

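- The addition of Magic Compose, Emoji Wallpaper, and Cinematic Wallpaper to Android. Magic Compose brings generative AI to Messages to help people write replies in different styles, while the new wallpaper options let people create custom backgrounds for their devices. Explore how to make Android devices unique here.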

- Vertex AI updates from Google Cloud, including new features, foundation models, and APIs that let customers generate text, images, and code from simple natural language prompts. See what’s coming to Vertex AI here.

For a full list of the big announcements, go to https://io.google/2023/.