New research from iProov, the world’s leading provider of science-based biometric identity verification solutions, reveals that most people struggle to detect deepfakes—highly realistic AI-generated videos and images designed to impersonate real people. The study, which tested 2,000 UK and US consumers, found that only 0.1% of participants could accurately distinguish real content from deepfakes.

Key Findings

Deepfake detection is alarmingly low – Only 0.1% of respondents correctly identified all deepfake and real stimuli (images and videos) even when primed to look for them. In real-world scenarios, where awareness is lower, the vulnerability is likely even greater.

Older generations are more vulnerable – About 30% of 55- to 64-year-olds and 39% of those aged 65+ had never heard of deepfakes, highlighting a significant knowledge gap that increases susceptibility to deception.

Deepfake videos are harder to detect than images – Participants were 36% less likely to correctly identify a synthetic video than a synthetic image, raising concerns about fraud risks in video calls and identity verification.

Deepfakes are widely misunderstood – While awareness is rising, 22% of consumers had never heard of deepfakes before participating in the study.

False confidence is rampant – Despite poor detection skills, over 60% of participants believed they were good at spotting deepfakes. This overconfidence was particularly pronounced among young adults (18-34 years old).

Social media trust erodes – Platforms like Meta (49%) and TikTok (47%) are seen as the primary sources of deepfake content, leading 49% of consumers to trust social media less after learning about deepfakes. However, only one in five would report a suspected deepfake.

Deepfakes fuel societal concerns – 74% of respondents worry about the impact of deepfakes, with 68% citing fake news and misinformation as their primary concerns. Among older adults, concern rises to 82%.

Lack of reporting mechanisms – 48% of consumers don’t know how to report deepfakes, and 29% take no action at all when encountering one.

Consumers fail to verify online information – Despite the rising threat, only 11% critically analyze sources, and only one in four actively searches for alternative sources when they suspect a deepfake.

Expert Insights and Industry Impact

Professor Edgar Whitley, a digital identity expert at the London School of Economics and Political Science, highlights the growing challenge: “Security experts have long warned of the threats posed by deepfakes. This study confirms that organizations can no longer rely on human judgment alone and must adopt advanced authentication solutions to combat deepfake risks.”

iProov CEO Andrew Bud reinforces the urgency: “With just 0.1% of people able to accurately identify deepfakes, both consumers and organizations remain highly vulnerable to fraud. Criminals are exploiting this blind spot, putting personal and financial security at risk. Technology companies must take responsibility by implementing robust biometric security measures, such as facial authentication with liveness detection, to protect against these evolving threats.”

The Growing Threat of Deepfakes

Deepfake technology is advancing rapidly. iProov’s 2024 Threat Intelligence Report revealed a 704% increase in face swaps, a technique commonly used in deepfakes, over the past year. These AI-generated forgeries allow cybercriminals to impersonate individuals, gain unauthorized access to sensitive data, and create fraudulent identities for illicit activities such as loan applications and financial scams. The ability to distinguish truth from fabricated content is becoming increasingly difficult, with major implications for security, trust, and misinformation.

What Can Be Done?

As deepfakes become more sophisticated, human detection alone is no longer reliable. Organizations must adopt advanced biometric technology with liveness detection—verifying that an individual is:

- The correct person

- A real person

- Authenticating in real time
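
As a rough illustration only, the three conditions above can be read as a single all-or-nothing decision. The sketch below is not iProov's implementation. The names `VerificationResult` and `is_verified` are hypothetical, and so is the short description of what each check might cover. It assumes each check has already produced a simple pass or fail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationResult:
    """Outcome of the three checks (hypothetical field names, assumed pass/fail)."""
    is_correct_person: bool  # assumption: the face matches the claimed identity
    is_real_person: bool     # assumption: a live human, not a synthetic or copied face
    is_real_time: bool       # assumption: captured now, not replayed or injected


def is_verified(result: VerificationResult) -> bool:
    """Accept only when all three checks pass; any single failure rejects."""
    return (
        result.is_correct_person
        and result.is_real_person
        and result.is_real_time
    )


# A deepfake may resemble the right person yet still fail the other two checks.
deepfake_attempt = VerificationResult(True, False, False)
genuine_attempt = VerificationResult(True, True, True)

print(is_verified(deepfake_attempt))  # False
print(is_verified(genuine_attempt))   # True
```

The point of the sketch is that a match on appearance alone is not enough: the attempt is accepted only when all three conditions hold together.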

To stay ahead of evolving deepfake techniques, businesses and governments must implement continuous threat detection, improved security protocols, and collaboration with policymakers to combat digital identity fraud effectively.

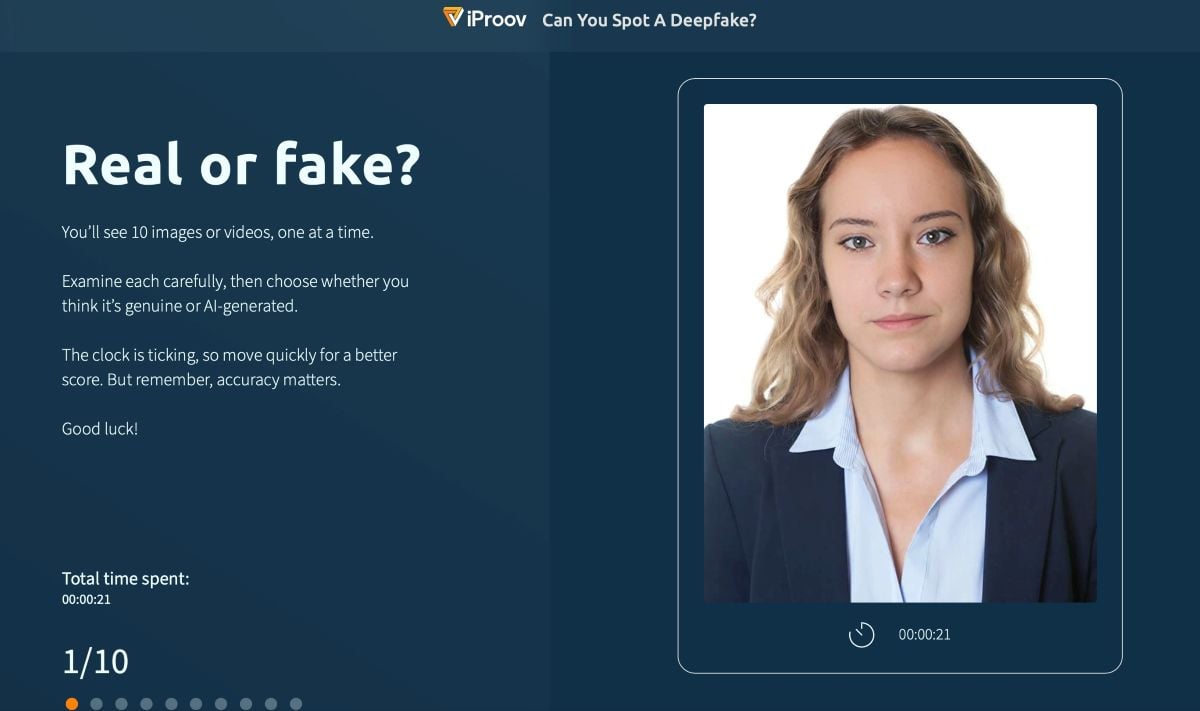

Take the Deepfake Detection Test

Think you can spot a deepfake? Put your skills to the test! iProov has created an interactive online quiz that challenges users to distinguish between real and AI-generated content. Take the quiz and see how you score.